Our computers are sexist towards male and female politicians

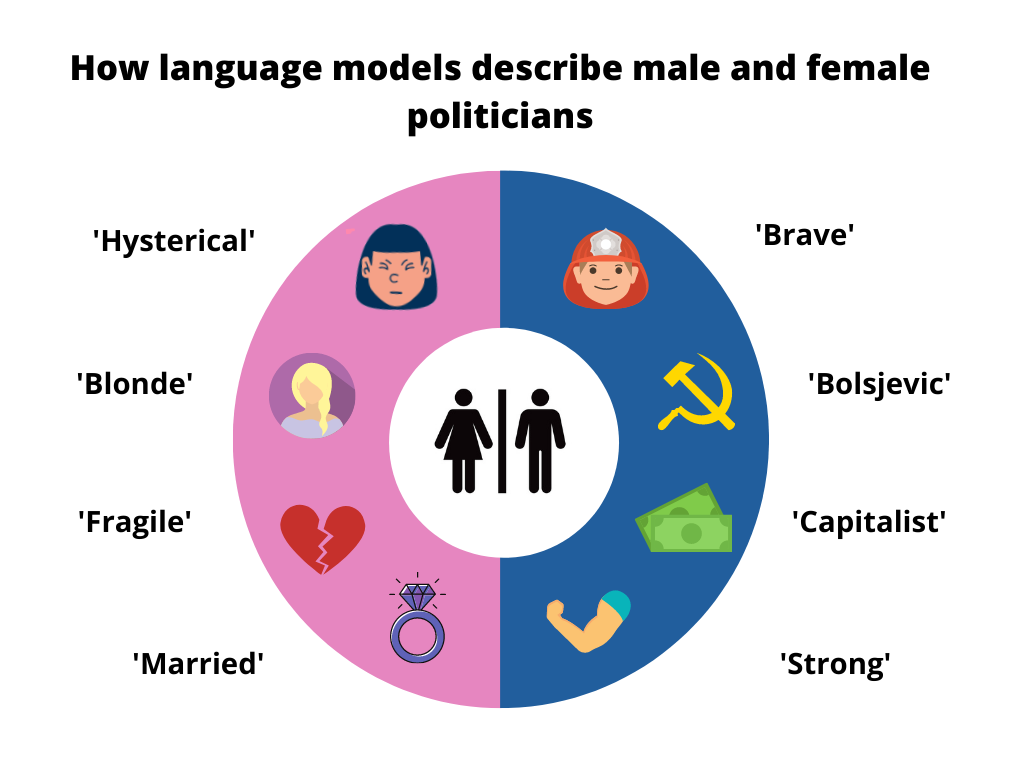

Female politicians are often described as 'beautiful' or 'hysterical' by language models, while descriptors such as 'brave' and 'independent' are elicited for their male counterparts. A new analysis by researchers from the University of Copenhagen makes this problem abundantly clear. According to one of the researchers behind the study, sexist and stereotypical descriptions of gender are a problem for gender equality.

When Google dishes out search term suggestions or helps us translate texts from Danish into English, we interact with language models – a kind of algorithm – that try to predict the most likely next word in a sentence.
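To make the idea of "the most likely next word" concrete, here is a deliberately simplified sketch. It is not how Google's systems are built (modern language models are large neural networks). It assumes a tiny, made-up corpus and a count-based bigram model, and the function names are hypothetical: the model simply picks the word that most often followed the previous word in its training text.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """For each word, count how often every other word follows it."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    counts = model.get(word.lower())  # .get does not create a new entry
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, for illustration only.
corpus = [
    "the minister is brave",
    "the minister is strong",
    "the minister is brave",
]
model = train_bigram_model(corpus)
print(predict_next(model, "is"))  # brave
```

Because the prediction is nothing more than a tally of the training text, changing what the model reads changes what it suggests.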

However, a new research project supported by Danmarks Frie Forskningsfond (Independent Research Fund Denmark) and carried out by researchers at the University of Copenhagen, the University of Cambridge and ETH Zürich demonstrates that language models have a gender bias when it comes to describing male and female politicians.

Indeed, our internet-fed computers often link female politicians with words that describe their appearance, weaknesses and marital or family status, while describing their male counterparts in terms of their drive, decisiveness and political convictions, explains one of the researchers behind the project.

“Female politicians are often described as 'beautiful' or 'hysterical' in language models, while descriptors like 'brave' and 'independent' are elicited for male politicians. This is a problem for equality in our society. Language models that treat genders differently can reinforce the way in which we think and talk about each other in real life,” says Isabelle Augenstein, who is an associate professor at the University of Copenhagen’s Department of Computer Science and head of the Natural Language Processing section.

Together with the research team, Augenstein is analyzing a huge dataset of how 250,000 politicians are described in different language models across six languages: English, Arabic, Hindi, Spanish, Russian and Chinese. The Danish part of the analysis has yet to be completed, but similar trends are expected.

Unmarried women and strong men

Using Wikidata, a sister project of Wikipedia that stores knowledge about people in a structured format, the researchers compiled a list of the many politicians it contains.

They then fed the politicians' names to a language model trained to predict the most likely next adjective in the sentence. So far, they have found that, across all six languages, the models often suggest words related to appearance and family relationships for female politicians.

“Words describing family or marital status, such as 'divorce', 'unmarried', 'pregnant' or 'mother' are used far more for female politicians than for men. The same goes for words like 'blond' and 'beautiful',” explains Isabelle Augenstein.
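The article does not spell out the statistics behind a phrase like "far more for female politicians". As an assumed, simplified illustration, and not the researchers' actual method, one way to compare how often a model suggests each adjective for female versus male politicians is a smoothed log-ratio of relative frequencies. The `gender_skew` function, the "f"/"m" labels and the example predictions below are hypothetical.

```python
import math
from collections import Counter


def gender_skew(predictions, alpha=1.0):
    """Score each adjective by how much more often it is predicted for "f" than "m".

    predictions: iterable of (gender, adjective) pairs, with gender "f" or "m".
    Returns {adjective: smoothed log-ratio}. Positive scores lean towards "f",
    negative scores towards "m".
    """
    counts = {"f": Counter(), "m": Counter()}
    for gender, adjective in predictions:
        counts[gender][adjective] += 1

    vocab = set(counts["f"]) | set(counts["m"])
    # Add-alpha smoothing: every word gets alpha pseudo-counts per gender,
    # so words seen for only one gender still get a finite score.
    totals = {g: sum(c.values()) + alpha * len(vocab) for g, c in counts.items()}

    return {
        word: math.log((counts["f"][word] + alpha) / totals["f"])
        - math.log((counts["m"][word] + alpha) / totals["m"])
        for word in vocab
    }


# Made-up model predictions, for illustration only.
preds = [
    ("f", "beautiful"), ("f", "beautiful"), ("f", "strong"),
    ("m", "strong"), ("m", "strong"), ("m", "brave"),
]
scores = gender_skew(preds)
for word, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word}: {score:+.2f}")
# beautiful: +1.10
# strong: -0.41
# brave: -0.69
```

A positive score means the adjective is relatively more common in predictions for female politicians, a negative one that it leans towards male politicians.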

Male politicians, on the other hand, are more frequently described in terms of their political work and job performance, even if not always in flattering terms. Augenstein elaborates:

“'Capitalist', 'strong', 'Bolshevik' and 'militant' are some of the terms that language models associate with male politicians, according to the preliminary study.”

“Ethically speaking, this becomes a problem if we desire a society where politicians are described by their professional qualifications and work performance, as opposed to their appearance or family dynamics. So, when men are described in terms of their actions and women by their looks, it is a bias that we ought to take a very close look at,” states Augenstein.

Language models should be trained carefully

Language models are trained on data from sources including websites, social media and Wikipedia. As a result, the way we write about each other affects the responses we receive from Google and other search engines.

Augenstein points out that this makes it a challenge to overcome the stereotypical and sexist categorizations of female and male politicians.

“The solution is multi-faceted. We need to try to be polite when composing text online. At the same time, we should consider basing language models on data that contains fewer sexist and racist terms. Here in Denmark, we could base models on text data from high-quality sources such as DR, the Danish Broadcasting Corporation, where the language standards are generally higher than on social media. Other countries could do something similar,” she concludes.

Why language models are important

- We interact with language models whenever we're online, for example when a search engine suggests search terms or translates a text for us.

- Furthermore, natural language processing (NLP) applications based on language models can summarize large texts, which can be useful for local and regional governments that need an overview of the large quantities of data about their citizens. Companies also use language models to sort through job applications.

- According to Isabelle Augenstein, it is important for us to ensure that these language models contain as little sexist and racist language as possible.

Contact

Isabelle Augenstein

Associate Professor

Department of Computer Science

University of Copenhagen

+45 93 56 59 19, augenstein@di.ku.dk

Ida Eriksen

Journalist

Faculty of Science

University of Copenhagen

+45 93 51 60 02, ier@science.ku.dk